I’ve written previously about the pseudo-science underlying the promotion of oxaloacetate (OAA, yes the Krebs’ cycle intermediate) as a dietary supplement. The TL/DR, is that the company involved (Terra Biological) touted OAA as a treatment for various diseases, based on model organism data (worms and mice), which earned them a warning letter from the FDA. The “evidence” was published in predatory journals (many of which don’t exist any more) with minimal conflict-of-interest disclosures. Couple with the ridiculous doses you’d need in humans to achieve the same level as used in animals, and a number of fundamental misunderstandings about the basic biochemistry of OAA, and you can see why I rightfully called it “shenanigans”.

Shortly after that post was published, the company’s CEO Alan Cash called me on the ‘phone, threatening to sue if I didn’t take it down. I declined and asked him to point out exactly what in the post was untrue or would form the basis for a lawsuit. I never heard back from his lawyers.

More OAA Garbage – now with added “Clinical Trials”

Recently, I was alerted to a new paper reporting on a clinical trial (I use the term loosely) for the use of OAA as a therapy for PMS. The senior author is Alan Cash, and let’s just say there are a number of issues with this paper, which should give pause to anyone considering wasting their money on Jubilance for PMS…

(1) The study allegedly took place at the “Energy Medicine Institute” in Boulder CO. A quick search shows that the website has lapsed and been taken over for advertising. The business address (27 Arrowleaf Ct., Boulder, CO 80304) shows up on Google maps as a house in a residential neighborhood. The business is registered under an SIC code (Standard Industrial Classification system) of 7311, which is advertising agency. There are several other organizations with similar names (e.g., Energy Medicine Research Institute and Energy Medicine Institute), but the lead author on the paper is Lisa Tully PhD, who appears to have died recently in Boulder CO, so I guess that tells us which one we’re dealing with.

(2) The only MD on the paper is John Humiston MD from the “Center for Health and Wellbeing” (no address or location given). There’s a John Humiston who, according to QuackWatch is known for injecting urine into patients in Mexico. Quackwatch says he’s on the staff of the San Diego Center for Health and Wellbeing, which is located near Terra Biological HQ. Notably Humiston is not listed on their site, but this does appear to be a medical facility so it’s possibly where the study actually took place.

(3) The methods section states “Institutional Review Board approval was obtained”. Academic medical institutions have their own IRBs, but others have to go to a commercial IRB. In this case, it appears they used ICRM, which seems to be a preferred venue for quack stem-cell therapies. It’s not very professionally run (e.g., the company blog includes a story about the Mayo Clinic… in Rochester NY!) Their client portal and online submission system is “coming soon”. Notably, nowhere in the paper is it stated how the patients were recruited.

(4) Looking at the part A vs. part B of the study (Table 2) they say the reason they did B is because there was a “carry over” effect in part A, where the patients who got the drug first ended up showing significant improvement even if they were later on the placebo. What’s interesting is the 4 scores in the placebo group were 25.9, 21.4, 24.0, and 6.5. The funny thing is, when they did the study the 2nd time, the numbers were 28.1, 15.0, 15.1, and 6.2 in the placebo group. The only thing that really changed was the p values came down. So, the “significant effect” in the placebo group actually became even more significant when they received the placebo first and then the drug. This indicates there was a massive placebo effect. The second study (part B) was designed to “overcome the carry over effect”, but all it did was prove that the reason they saw an effect in the placebo group was NOT due to carry over!

(5) There are several typos indicating these folks have no clue what they’re talking about when it comes to basic research (and the fact these were not picked up during peer review tells us about the quality of editing by the journal). For example in the discussion they refer to “C75B1/6” mice. WTF?

(6) The statistical methods are weird. Looking at one of the scores (Becks Depression) in Table 1, they state the baseline errors as standard deviation (SD, 27.8 +/- 11.3). But when it comes to the results (Table 2), they show the numbers in percentages and they give standard errors (SE). The SE is the standard deviation divided by the square root of the number of samples. In part A they had 26 patients, so we you can back calculate from their SE value to get a SD. Doing that, the percent change is not 52.2 +/- 8.8% as they have it listed in Table 2, it’s actually 52.2 +/- 41.3% (using SD instead of SE). This indicates the effects were highly variable. Using these numbers to convert the percent changes back into real units, we can calculate the actual Becks Depression score for both groups. At baseline it was 27.8+/-11.3 as they state (mean +/- SD). For the OAA group it was 42.3+/-33.5. Further calculating the 95% confidence intervals, the baseline group would range between 23.5 and 32.1, while the OAA group would be 28.3 to 56.3. As such, there’s no way these are significant by ANOVA. That probably explains why they chose not to use ANOVA to analyze the data.

(7) Lastly, why is this buried in an obscure Korean journal without an official impact factor? The journal recommends but does not require a data sharing statement and policy, but there is none for this paper.

But there’s more… OAA and COVID!

Basking in the glory of a clinical trial, it was only a matter of time before the COVID bandwagon would be jumped on. Thus, we find ourselves in the unfortunate situation of viewing this paper, reporting on another clinical trial for the use of OAA to treat chronic fatigue syndrome/myalgic encephalomyelitis (CFS/ME) and long-COVID fatigue.

In general, CFS/ME is a very poorly defined and poorly understood set of disease symptoms, for which there is currently no cure. As such, people suffering from this syndrome are often willing to try anything, including $500 a bottle supplements (FYI, PubChem lists 62 suppliers of OAA, for those interested).

In terms of what’s wrong with this paper, an anonymous commenter on PubPeer has already raised numerous issues, including undisclosed conflicts of interest, deviation from pre-registered trail endpoints, missing data, and several other problems. The author of the PubPeer critique cites my previous post, agreeing that many of the claims made regarding OAA as a therapeutic are at odds with basic biochemistry principles.

There are additional problems to flag with this study:

(1) The paper lists the affiliation of its senior author David Lyons Kaufman, MD, as the “Center for Complex Diseases, Seattle WA, USA.” Such a business with a person of the same name does really exist, but it’s based in Mountain View CA, not Seattle.

There’s a David L. Kaufman in Seattle, who appears to be affiliated with the “International Peptide Society.” This organization appears to promote the use of “peptide therapeutics” without ever stating what the peptides are. They offer all kinds of educational events and certification in the use of “peptides” in regenerative therapy, but their website is devoid of ANY peer-reviewed scientific literature. Their “Find a Practitioner” page is a laundry list of new age quackery – seriously, do not jump down that rabbit hole!

(2) The National Clinical Trials Database page for the CFS/ME trial lists our old friend Lisa Tully as the Principal Investigator, and the Energy Medicine Institute only location for the trial. David Kaufman is not listed anywhere on the NCT page. Surely a big change such as the lead investigator in Colorado dying, and then moving the trial site to Seattle (or is it Mountain View) would warrant an update to the NCT database? As such, it’s not exactly clear where this trial took place.

(3) Mor e company PR about the use of OAA for CFS/ME claims that the reason Kaufman got interested in OAA is he saw it was on a list of metabolites that declined in CFS/ME patients, in a 2016 metabolomics study. The problem is, a detailed look at the data reveals that the decline in OAA was not statistically significant after correcting for false discovery rate (Q value vs. P value). The actual data are on the left (red is controls, blue/green is CFS patients), showing essentially no effect. OAA didn’t even make it onto the bottom of the chart for “relative importance” of metabolite changes (Figure 3 of the paper). Another metabolomics study of CFS (published in a better journal) did not find anything relating to OAA. As such, there is no credible evidence that OAA levels are lower in CFS/ME patients, so the entire premise for the study falls flat.

e company PR about the use of OAA for CFS/ME claims that the reason Kaufman got interested in OAA is he saw it was on a list of metabolites that declined in CFS/ME patients, in a 2016 metabolomics study. The problem is, a detailed look at the data reveals that the decline in OAA was not statistically significant after correcting for false discovery rate (Q value vs. P value). The actual data are on the left (red is controls, blue/green is CFS patients), showing essentially no effect. OAA didn’t even make it onto the bottom of the chart for “relative importance” of metabolite changes (Figure 3 of the paper). Another metabolomics study of CFS (published in a better journal) did not find anything relating to OAA. As such, there is no credible evidence that OAA levels are lower in CFS/ME patients, so the entire premise for the study falls flat.

(4) In that same PR piece, it’s notable that Kaufman is talking about results from ongoing trials in CFS/ME patients in October of 2021. The published study claims recruitment began in February 2021, and the two sets of patient characteristics have some striking similarities:

The PR post states 52 patients across 2 different doses (500mg or 1000mg twice daily), average age 49, 77% women, and an average 25% drop in fatigue score at 6 weeks. The published study reports 76 patients, addition of a 3rd dose group (1000mg 3x daily), average age 47, 74% women, and a reduction in fatigue of 22.5% to 27.9%.

It is therefore reasonable to assume the patients being discussed in the October 2021 PR piece have significant overlap with those in the final published paper in June 2022. The only addition for the paper is the long COVID group, which makes the final sentence of the PR piece particularly interesting…

“OAA studies are being pursued in Alzheimer’s, ALS, myasthenia gravis, cancer, ME/CFS and long COVID. The long-COVID double-armed, randomized, double-blinded placebo-controlled trial is being funded by the same company (Terra Biological) that provided the supplement to Kaufman.”

Clearly the long COVID study that ended up being published here was not double-blinded or placebo-controlled. Clearly the statement on the NCT database page that the study would be double-blinded and placebo-controlled, was not followed through on. The fact that the entire NCT page for this published study is about COVID patients, and doesn’t actually mention non-COVID related CFS/ME at all, is also a problem. There does appear to be another clinical trial registered for OAA in CFS/ME, but it only just started recruiting patients. The CFS/ME patients who took part in the trial reported here, were outside the boundaries of the NCT registered trial for long COVID patients. Treating patients first and then registering the trial later, is definitely not kosher!

Despite several red flags regarding these trials, news outlets continue to regurgitate the company’s PR claims. Terra Biological continues to dance at the boundaries of actual rigorous clinical trials and medicine, by doing things that look sort-of like trials to the genera public, while collaborating with weird quackery institutes, dosing patients outside the boundaries of what they register with the NCT database, and then publishing the data in journals that don’t seem to give a crap about rigor.

As I have stated before and will do so again – run, don’t walk, away!

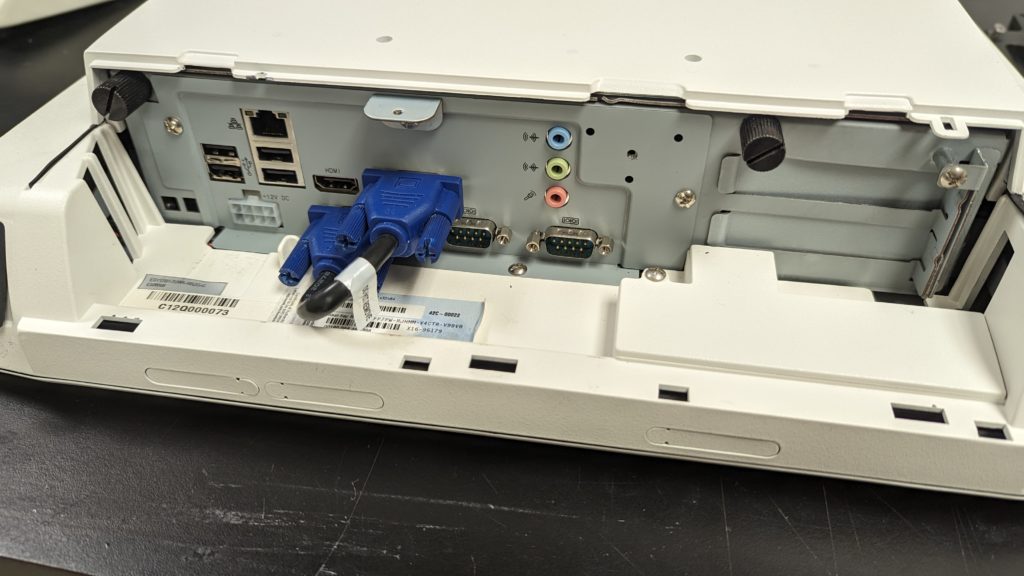

A rotary evaporator (RotaVap) is a core piece of equipment for any lab that does chemical synthesis, and Buchi make some of the best ones. Unfortunately, ours is about 25 years old and they no longer make parts for it.

A rotary evaporator (RotaVap) is a core piece of equipment for any lab that does chemical synthesis, and Buchi make some of the best ones. Unfortunately, ours is about 25 years old and they no longer make parts for it.

The result is the motor spins but the plastic rung just grinds around and doesn’t spin the metal part that’s eventually attached to the flask. The worm gear is basically slipping once per rotation. We tried buying a used model off eBay, and upon dissection it had exactly the same problem, so it appears this is a common fault on this particular model (RE-111).

The result is the motor spins but the plastic rung just grinds around and doesn’t spin the metal part that’s eventually attached to the flask. The worm gear is basically slipping once per rotation. We tried buying a used model off eBay, and upon dissection it had exactly the same problem, so it appears this is a common fault on this particular model (RE-111).